Vert.x is an event-driven, non-blocking platform on which we can develop applications. Vert.x is like Node.js, but the biggest difference is that it runs on the JVM; it is also scalable, concurrent, distributed and polyglot.

The following four key properties of Vert.x help us develop reactive applications.

- Async and non-blocking model

- Elastic

- Resilient

- Responsive

Async and non-blocking model

None of the Vert.x APIs block the calling thread (with a few exceptions). If a result is available immediately, it is returned; otherwise we supply a handler that receives the result as an event some time later.

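    // Assumed from context: this runs inside a verticle's start(Future<Void> fut)
    // method, which provides both the vertx instance and the fut start future.
    vertx
        .createHttpServer()
        // Called for every incoming HTTP request.
        .requestHandler(r -> {
            r.response()
                .end("<h1>Hello from my first Vert.x 3 application</h1>");
        })
        // listen() returns immediately; the handler runs once binding has finished.
        .listen(8080, result -> {
            if (result.succeeded()) {
                fut.complete();
            } else {
                fut.fail(result.cause());
            }
        });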

We can handle a highly concurrent workload using a small number of threads. In most cases Vert.x calls handlers using a thread called an event loop.

Hence the golden rule: don’t block the event loop.

Since nothing blocks the event loop, Vert.x can handle a huge number of events in a short amount of time. This is called the reactor pattern.

Node.js is single-threaded: its event loop runs on only one thread at a time. Vert.x works differently here. Instead of a single loop, each Vert.x instance maintains several event loops. This is called the multi-reactor pattern.
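To make the multi-reactor idea more concrete, here is a minimal sketch using only the JDK. It is not how Vert.x is implemented internally; the class MultiReactorSketch and its methods are hypothetical names chosen for illustration. Each "event loop" is a single-threaded executor, and events with the same key are always handled on the same loop.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical illustration of a multi-reactor; not Vert.x internals.
class MultiReactorSketch {
    private final List<ExecutorService> loops = new ArrayList<>();

    MultiReactorSketch(int loopCount) {
        for (int i = 0; i < loopCount; i++) {
            String name = "event-loop-" + i;
            // One thread per loop, so handlers on a loop run strictly one at a time.
            loops.add(Executors.newSingleThreadExecutor(task -> new Thread(task, name)));
        }
    }

    // Events with the same key always land on the same loop (and thread).
    CompletableFuture<String> dispatch(int key, String event) {
        ExecutorService loop = loops.get(Math.floorMod(key, loops.size()));
        return CompletableFuture.supplyAsync(
            () -> Thread.currentThread().getName() + " handled " + event, loop);
    }

    // Lets queued events finish, then stops the loop threads.
    void shutdown() {
        loops.forEach(ExecutorService::shutdown);
    }

    public static void main(String[] args) {
        MultiReactorSketch reactor = new MultiReactorSketch(2);
        for (int key = 0; key < 4; key++) {
            System.out.println(reactor.dispatch(key, "event-" + key).join());
        }
        reactor.shutdown();
    }
}
```

With two loops, keys 0 and 2 are handled by the first loop and keys 1 and 3 by the second, so several events can be processed in parallel while each handler still sees a single thread.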

In addition, Vert.x offers a varied set of non-blocking APIs. We can use Vert.x for non-blocking web operations (based on Netty), filesystem operations, data access (for instance JDBC, MongoDB and PostgreSQL), etc.

Elastic

Vert.x apps react well to increasing load because the architecture is highly concurrent and distributed. Vert.x also takes advantage of multi-core CPUs.

Resilient

Vert.x applications are also resilient, treating failure as a first-class citizen: they can face failures, isolate them, and implement recovery strategies easily. In a microservice architecture, every service can fail, and so can every interaction between services.

Vert.x comes with a set of resilience patterns.

Vert.x Circuit Breaker is an implementation of the Circuit Breaker pattern for Vert.x.

It keeps track of the number of failures and opens the circuit when a threshold is reached. Optionally, a fallback is executed.

Supported failures are:

- failures reported by your code in a Future

- exceptions thrown by your code

- uncompleted futures (timeout)

Hystrix also provides an implementation of the circuit breaker pattern. You can use Hystrix with Vert.x instead of the Vert.x Circuit Breaker, or in combination with it.
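The failure counting and fallback behaviour described above can be sketched in plain Java. This is not the Vert.x Circuit Breaker or Hystrix API; SimpleCircuitBreaker is a hypothetical class, it assumes a success resets the failure count, and it leaves out timeouts and the reset (half-open) logic that a real implementation needs.

```java
import java.util.function.Supplier;

// Hypothetical, simplified breaker; not the Vert.x Circuit Breaker API.
// Not thread-safe: assumes all calls come from a single thread.
class SimpleCircuitBreaker {
    private final int maxFailures;
    private int failures = 0;

    SimpleCircuitBreaker(int maxFailures) {
        this.maxFailures = maxFailures;
    }

    // The circuit is open once the failure threshold has been reached.
    boolean isOpen() {
        return failures >= maxFailures;
    }

    <T> T execute(Supplier<T> operation, Supplier<T> fallback) {
        if (isOpen()) {
            return fallback.get(); // open circuit: do not call the operation at all
        }
        try {
            T result = operation.get();
            failures = 0; // assumption of this sketch: a success resets the count
            return result;
        } catch (RuntimeException e) {
            failures++; // an exception thrown by the operation counts as a failure
            return fallback.get();
        }
    }

    public static void main(String[] args) {
        SimpleCircuitBreaker breaker = new SimpleCircuitBreaker(2);
        for (int i = 0; i < 3; i++) {
            String reply = breaker.execute(
                () -> { throw new IllegalStateException("service down"); },
                () -> "fallback");
            System.out.println(reply + ", open=" + breaker.isOpen());
        }
    }
}
```

In the usage above, the third call never reaches the failing operation, because the circuit opened after the second failure.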

Responsive

The final property, responsive, means the application is real-time and engaging. It continues to provide its service in a timely fashion even when the system is facing failures or peaks in demand.

Verticles

Vert.x proposes a model based upon autonomous and loosely coupled components.

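In Vert.x, such a component is called a verticle, and it can be written in any of the languages Vert.x supports. In Vert.x 2 each verticle had its own classloader; Vert.x 3 uses a flat classpath by default, with isolation groups available as an option.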

The verticle approach has some similarities with the Actor model but is not a full implementation of it.

Each verticle instance is always executed by the same event-loop thread and shares no state with other verticles. To scale verticles, Vert.x provides an “instances” option, which tells Vert.x how many instances of a verticle should be deployed. The instances are spread across the available event loops, so they can run concurrently, and Vert.x balances the workload between the instances of a particular verticle. This way you can make effective use of all the cores of the server it runs on. We can also easily scale verticles to match increasing performance requirements and handle a large number of concurrent requests.

Event loop

We already know that the Vert.x APIs are non-blocking and won’t block the event loop, but that’s not much help if we block the event loop ourselves in a handler.

Examples of blocking include:

- Thread.sleep()

- Waiting on a lock

- Waiting on a mutex or monitor (e.g. synchronized section)

- Doing a long-lived database operation and waiting for a result

- Doing a complex calculation that takes a significant amount of time

- Spinning in a loop

So… what is a significant amount of time?

If you have a single event loop and you want to handle 10,000 HTTP requests per second, then each request can’t take more than 0.1 ms to process (1 second divided by 10,000 requests), so you can’t block for any longer than that.
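The arithmetic behind that figure can be written down as a small helper. EventLoopBudget and budgetMillis are hypothetical names, and the calculation assumes requests are spread evenly across the event loops, which is a simplification.

```java
// Hypothetical helper for the back-of-the-envelope calculation above.
class EventLoopBudget {
    // Average time (in ms) each request may occupy an event loop,
    // assuming requests are spread evenly across the loops.
    static double budgetMillis(int requestsPerSecond, int eventLoops) {
        return 1000.0 * eventLoops / requestsPerSecond;
    }

    public static void main(String[] args) {
        // One loop, 10,000 requests per second: 0.1 ms per request.
        System.out.println(budgetMillis(10_000, 1));
        // Four loops at the same load: 0.4 ms per request.
        System.out.println(budgetMillis(10_000, 4));
    }
}
```

Adding event loops raises the budget per request, but it stays well below a millisecond at this load, which is why even short blocking calls matter.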

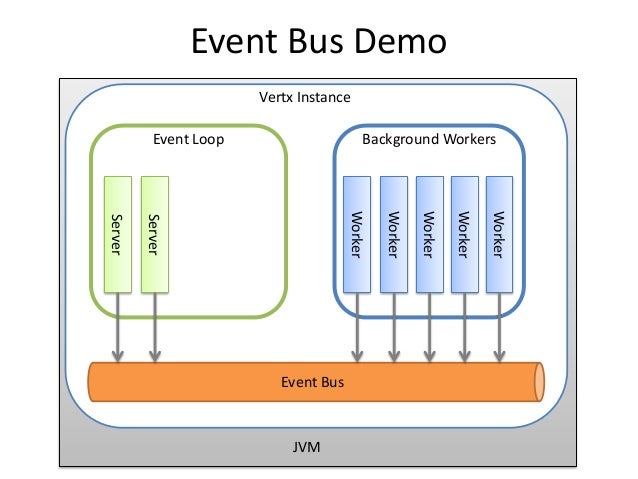

Event bus

The event bus is the nervous system of Vert.x. There is a single event bus instance for every Vert.x instance, and it is obtained using the eventBus() method.

The event bus allows different parts of your application to communicate with each other irrespective of what language they are written in, and whether they’re in the same Vert.x instance, or in a different Vert.x instance.

The event bus supports publish/subscribe, point to point, and request-response messaging.
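The difference between these messaging styles can be illustrated with a tiny in-memory bus written in plain Java. This is not the Vert.x EventBus API; TinyEventBus and its methods are hypothetical, everything runs synchronously in one thread, and point-to-point and request-response are folded into a single request method for brevity.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical in-memory bus for illustration; not the Vert.x EventBus API.
class TinyEventBus {
    private final Map<String, List<Function<String, String>>> handlers = new HashMap<>();
    private final Map<String, Integer> sentCount = new HashMap<>();

    // Registers a handler on an address; its return value acts as the reply.
    void consumer(String address, Function<String, String> handler) {
        handlers.computeIfAbsent(address, a -> new ArrayList<>()).add(handler);
    }

    // Publish/subscribe: every handler on the address receives the message.
    // Returns how many handlers were notified.
    int publish(String address, String message) {
        List<Function<String, String>> list =
            handlers.getOrDefault(address, Collections.emptyList());
        list.forEach(h -> h.apply(message));
        return list.size();
    }

    // Point-to-point with a reply: exactly one handler, chosen round-robin.
    String request(String address, String message) {
        List<Function<String, String>> list = handlers.get(address);
        if (list == null) {
            throw new IllegalStateException("no handlers for " + address);
        }
        int index = sentCount.merge(address, 1, Integer::sum) - 1;
        return list.get(index % list.size()).apply(message);
    }

    public static void main(String[] args) {
        TinyEventBus bus = new TinyEventBus();
        bus.consumer("news", m -> "first got " + m);
        bus.consumer("news", m -> "second got " + m);
        System.out.println(bus.publish("news", "hello") + " handlers notified");
        System.out.println(bus.request("news", "ping"));
        System.out.println(bus.request("news", "ping"));
    }
}
```

Publishing reaches both handlers, while the two requests are answered by the first and then the second handler in turn.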
